Introduction

In this exercise, we will implement basic back-and-forth communication using MPI. Ping-pong communication involves sending and receiving messages between two process ranks.

In addition to the ping-pong exercise, this module will demonstrate how to run MPI locally in a Linux environment. Throughout these modules, we assume that all code will be executed on a cluster. However, you may wish to prototype/test your code on your local machine (e.g., on smaller problem sizes) and then transfer your code to the cluster for execution.

Student Learning Outcomes

- Implement several well-known communication patterns: ping-pong, ring communication, and random communication.

- Understand blocking message passing.

- Understand non-blocking message passing.

- Examine how blocking message passing may lead to deadlock.

Prerequisites

Some knowledge of the MPI API and familiarity with the SLURM batch scheduler.

MPI Primitives Used

- MPI_Send

- MPI_Recv

- MPI_Isend

- MPI_Wait

- Possibly MPI_Bcast

- Possibly other MPI_Send/MPI_Recv variants

Files Required

Compiling an MPI Program

To compile an MPI program, you must use mpicc as shown below. Flags may vary depending on the system.

mpicc pingpong_comm_starter.c -lm -o pingpong_comm_starter

Creating a Hostfile for Local Execution

The host file lists the hosts that the MPI processes will execute on. Create a host file called myhostfile.txt. Add a single line to myhostfile.txt using the example below. This assumes you want to execute up to 50 processes on your local machine. If you are using a different MPI implementation, please consult the documentation.

- If running Open MPI, add the line:

localhost slots=50

- If running MPICH, add the line:

localhost:50

If you do not know what implementation of MPI is installed on your system, the following command may output the information: mpirun --version.

Running your MPI Program

The example below shows how to execute your MPI program. In the example, $p=4$ processes will be executed.

mpirun -np 4 -hostfile myhostfile.txt ./pingpong_comm_starter

Problem Description

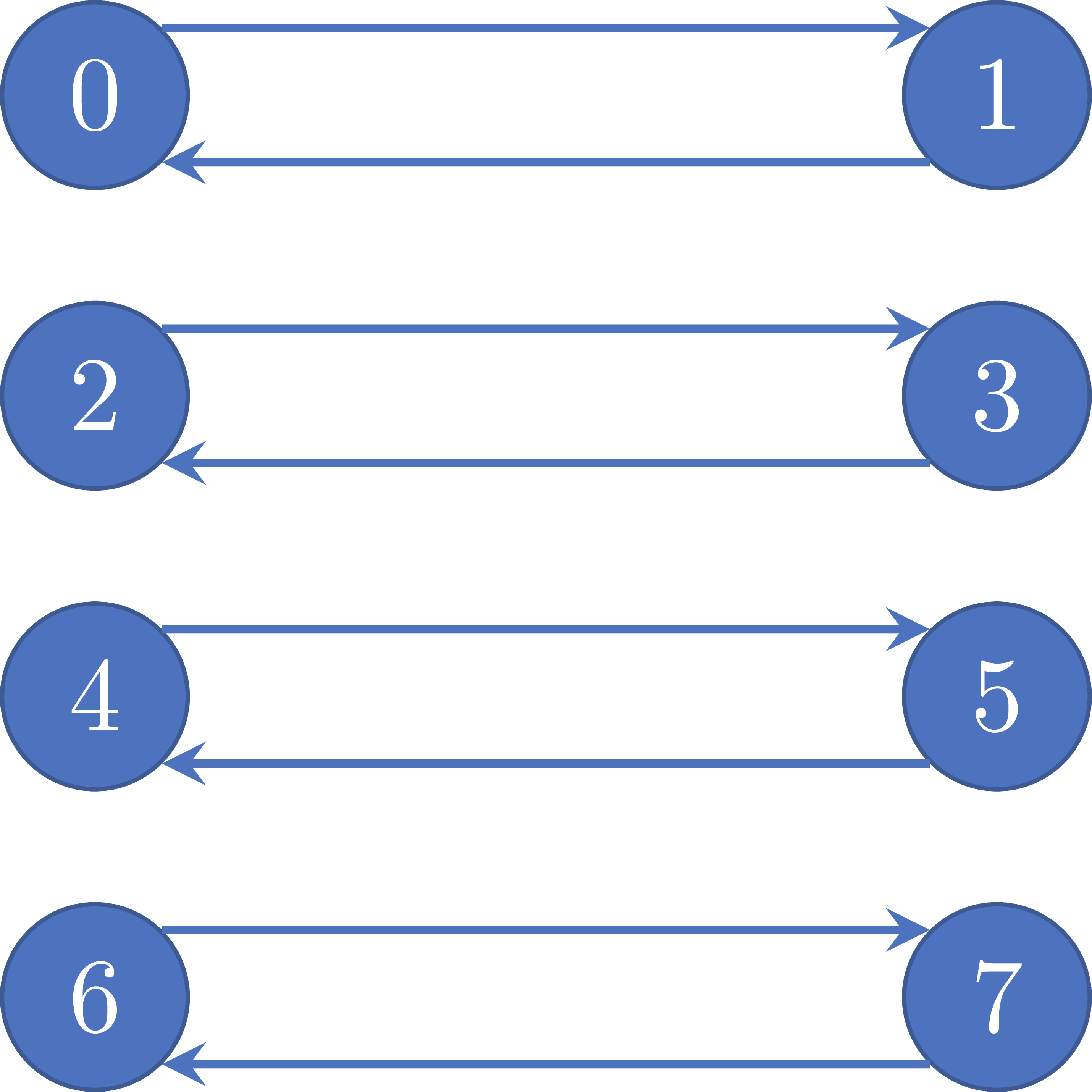

You will implement a program that performs sending and receiving between a pair of process ranks. You will use MPI_Send and MPI_Recv. Figure 1 shows an example of communication between $p=8$ total process ranks, where ranks 0 and 1 communicate with each other, ranks 2 and 3 communicate with each other, and so on.
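The pairing described above can be expressed as a small rank calculation. The following is a minimal sketch, not part of the starter file: the helper names `partner_of` and `pairing_is_valid` are hypothetical and are introduced here only for illustration. It assumes ranks are paired as (0,1), (2,3), and so on.

```c
/* Hypothetical helper (not in the starter file): returns the rank that
 * `rank` is paired with, assuming the pairs are (0,1), (2,3), ... */
int partner_of(int rank)
{
    /* Even ranks pair with the next rank; odd ranks pair with the previous one. */
    return (rank % 2 == 0) ? rank + 1 : rank - 1;
}

/* Hypothetical helper: returns 1 if every rank among `nprocs` ranks has a
 * partner (i.e., the number of ranks is positive and even), and 0 otherwise. */
int pairing_is_valid(int nprocs)
{
    return nprocs > 0 && nprocs % 2 == 0;
}
```

With $p=8$ ranks, for example, `partner_of(2)` gives 3 and `partner_of(3)` gives 2, while `pairing_is_valid(7)` gives 0 because one rank would be left without a partner.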

Programming Activity #1

Program the ping-pong exercise described above, following the guidelines below.

- Use and modify the starter file: pingpong_comm_starter.c.

- Only use the MPI_Send and MPI_Recv primitives.

- The program will take as input a variable number of process ranks. Make sure your program exits gracefully if an odd number of process ranks are entered. The program only needs to perform ping-pong communication with an even number of process ranks.

- Each time a rank sends to its neighboring rank, the data should contain the sender’s process rank. For example, rank 2 sends the integer value 2 to rank 3, and rank 3 sends the integer value 3 to rank 2.

- Each time you receive from your neighboring rank, add the value to a local counter. For instance, rank 2 will add 3 to its local counter once it receives the message from rank 3.

- Each process rank should send and receive 5 times in total.

- After each process has sent and received 5 times, the rank should output the value stored in its counter and exit gracefully.

- All even ranks should send first, and the odd ranks should receive first.

Figure 1: example of communications between pairs of process ranks, where there are $p=8$ total ranks.

- Q1: What does process rank 5’s counter store at the end of the computation?