|

"It's All in Who You Know:" Qualitative Population and Sampling Procedures

Dear Cyber-scholars! We've established the rationale for the qualitative approach, and also compared it with more traditional quantitative/experimental models. In addition, we've skipped ahead to the data analysis and reporting to examine some strategies for compiling qualitative findings and results.

Now, let's backtrack to the remaining elements of the research plan. Today, we'll concern ourselves with the "who/what" part: namely, population and sampling methods.

At this point, I'd like to suggest that the "more traditional" labels for population and sampling procedures also apply to qualitative research. For those of you who didn't take Intro to Research with me, I'm going to provide you with a link to the related Module #5 below.

http://jan.ucc.nau.edu/~mid/edr610/class/sampling/procedures/lesson5-1-1.html

Population and Sampling Procedures (EDR 610)

Sampling

In this chapter, we'll first take a look at some important distinctions in the nature of the population for qualitative vs. quantitative studies. In doing so, we'll visit Robert K. Yin's distinguishing terminology of "statistical generalization" vs. "analytic generalization." Then, we'll conclude with some specialized sampling terminology that is particularly appropriate for qualitative research studies.

I. The Nature of the Population: Some Key Differences in Thinking (between

the quantitative vs. qualitative approaches)

As a reminder to our Intro to Research friends, we learned that the population

is that totality (usually persons, but could be 'things' like curricular

materials, schools, clinics, etc.) to which wish to generalize or project

our sample findings.

As you'll see (or will recall) from related discussion you may have had in Intro to Statistics:

- We define our totality of interest; e.g., the population;

- We then proceed to select or draw a sample from that population -

the sample represents the "cases" (again, usually persons,

but not always) that we actually include in our research study; and

- Finally, based upon the findings and results from our sample, we then

project back or generalize these sample findings to our population.

Say, for instance, that we find an average difference in science achievement

score between the 6th-grade boys and girls of our sample. How confident

can we then be that this gender-based average difference in science

achievement would be expected to also hold true for the entire population

of 6th-graders from which we selected or drew our study sample?

Now ... in both of our "Intros," research and statistics,

we learned that if we select the sample randomly or probabilistically

from that population, we can then go on to generalize the sample findings,

if they are quantitative, with a certain degree of confidence to that

entire population. We learned to make statements such as "The

researcher can therefore be 95% confident that the average science achievement

scores will differ for the 6th-grade boys and girls in the population

at large."

We learned too that a probabilistic sampling procedure means

that every sampling unit (again, usually persons, but not always) had

some known, positive, non-zero (not necessarily equal) chance of being selected

into our sample. For this to happen, everything hinges on a detailed,

clear, operational definition of our population. This is so that we can

be able to list all 'elements' of that population; e.g., we'll know "who's

in and who's out." We didn't miss any eligible sampling unit, nor did

we double-count anyone. This, then, is the basis of that "everyone

had some known chance of being drawn."

Conversely, we learned that there will always be a margin of error,

called our alpha or Type I error, that the "quantitative effect"

(e.g., mean difference; correlation) that we observed in our sample is just

a 'sampling fluke,' but in reality there is no such "effect" (e.g.,

mean difference; correlation) in the population at large. But we learned

that we can bound this "risk of wrongly assuming an effect for the

whole population," or Type I error. Commonly accepted Type I error

risk factors are 5% or 1%.

Well -- this type of classic, quantitative inferential generalization is

fine and dandy -- but it may not be what you are looking for in qualitative

research! Qualitative design methodology expert Robert K. Yin refers to

the above probabilistic selection of subjects and generalization of quantitative

results as statistical generalization.

Yin has identified a totally different rationale and purpose for qualitative

research. He calls it analytic generalization. In analytic

generalization, according to Yin, you are looking not to generalize a single,

limited quantitative finding across many cases. Rather, you are seeking

an in-depth, rich, contextual understanding of a phenomenon.

-- > Think "fox vs. hedgehog"

back from our Module #1 and you'll have the right idea!!!

For analytic generalization, then, you are not seeking to project a

given limited finding across hundreds or thousands of cases with a specified

level of confidence. In fact -- you may not even know yet for sure what

the "theory," model, or finding is -- that is why you are

doing the exploratory qualitative work in the first place!

Thus, issues like "with 95% confidence," "Type I error,"

"p-values" and the like are of little or no importance - yet.

We are not that far in our understanding of the key variables, factors

or phenomenon of interest.

- As a result, then, for analytic generalization, probabilistic, random-sampling

schemes may be quite undesirable and counter-productive!

That "average" or "middle-ness" may be quite uninteresting

to you! You may need, and want, to pre-target the extreme cases! e.g.,

schools with "poor" interpersonal climate as compared to schools

with "good" climate so that you can "max out" the factors

that seem to be producing the difference and smoke them out more easily!

- Yet another related, key difference: you may not need, or want, "large"

samples for analytic generalization!

Again, your focus, like the 'territory' of the hedgehog, may be to 'stay

in a single spot' and acquire a detailed understanding of the factors

that drive that particular school, clinic, office, vocational rehabilitation

training center, and so forth. Your own goal for the time being is not

to identify factors that will generalize or apply to other such schools,

clinics, etc., for now. You may be doing the study to solve an immediate

and pressing problem at that school, clinic, etc. If what you find is

helpful in other similar settings, that is of course "gravy",

and what Yin refers to as our next step: cross-case analysis. But it

may not be your immediate need or focus for now. So for that reason,

you do not need to worry about large samples of certain minimum sizes.

Our Intermediate Statistics partners will also recall that we learned

some rules of thumb for desired minimum sample sizes in connection with

many inferential or analytic statistics, such as the independent-samples

and matched-pairs t-tests. You'll recall that one reason for such minimum

sample sizes is to help ensure "a good mix on all potential key

intervening variables" so that we can generalize the quantitative

findings confidently to our target population. Here, too, such rationale

is much more applicable to statistical generalization of limited quantitative

findings across large target populations, than it would be to gather

masses of "often messy" exploratory-type qualitative data

and use it to begin to make some sense of a broad phenomenon of interest.

So the larger sample sizes may not be needed for the latter.

-- > Think of the Donald Duck

addendum in our Module #1! More information times fewer subjects (qualitative)

balances out against Less information times more subjects (quantitative!)!

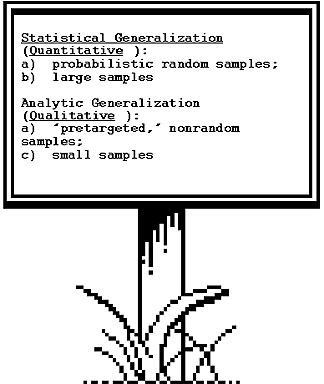

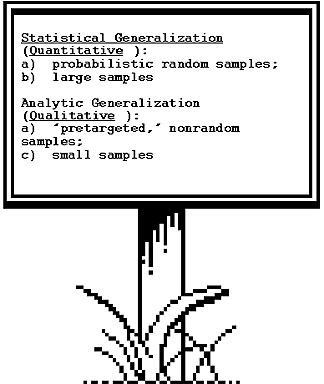

We might summarize the two major themes and points of distinction as

follows:

II. Some Specialized Qualitative Sampling Terminology

Please remember that qualitative procedures can be combined with quantitative

procedures! These are known as multimethod designs. Also, please recall

that qualitative approaches may on occasion be applied in a "theory-testing"

pseudo-experimental sense -- e.g., when the researcher is working with established,

well-validated models of behavior and phenomena and wishes to collect non-numeric

evidence on these models. Thus, as mentioned earlier, we can apply any and

all of the "more traditional" sampling terminology (please see

related http://jan.ucc.nau.edu/~mid/edr610/class/sampling/procedures/lesson5-1-1.html

Intro to Research Module 5) to qualitative studies, as well!

Nonetheless, given the key distinctions between "statistical"

and "analytic" generalization, some specialized sampling terms

have come into use with qualitative designs. The following come to us

courtesy of Michael Quinn Patton - not only a true "evaluation

research guru," but someone with a genuine "zest" and

"passion" for all things qualitative! I can't think of a finer

source! Here we go for some add'l. qualitative sample-selection buzzwords:

- Extreme or deviant-case sampling. As indicated earlier,

the "middle-ness" may actually not be all that interesting

or revealing for what we want to know from our qualitative data. Instead,

the extremities may be much more useful to us. Suppose we focus

on a clinic that has a reputation for "efficient" service

of clients, and compare it to one with long waiting lists. We would

study each one intensively and then kind of reason "bass-ackwards"

to discern those factors or variables that seem to be responsible for

the differences we observe. This, then, is the idea behind such pretargeting

of "extreme" cases. By maxing out differences, we hope

to have a better handle on discovering the 'causes' (in a non-experimental

sense, of course, but maybe even more valid!) of such differences.

- Maximum-variation sampling. This strategy is sort of

trying to "have your cake and eat it too." In other words,

remember the idea behind statistical generalization? You want to get

'as good a mix on as many other factors as possible' so that you can

more confidently project or generalize your findings regarding the single

phenomenon of interest back to a target population. That is, you want

to say, "there was a good mix of gender, ethnicity, aptitude level,

etc., etc., across both groups. So if I still see greater learning going

on with the hands-on peer-assisted teaching method, I can rule out these

other factors and more confidently attribute the difference to the teaching

method itself."

Well, normally a probabilistic (random) sampling procedure of "lots"

of cases from an also-large target population is a good way to help

ensure such a "mix." But what if you don't have the luxury

of "large" samples and/or the desire or ability to choose

them at random? Well, you can try and "purposefully build in"

as much diversity as possible into your selection of your more limited

number of cases for your qualitative sample. For example, if you are

selecting clinics from a centralized statewide web or network of such

clinics, you might make sure you draw from rural, urban and suburban

settings. In addition, you might try to 'sort of control for' experience

by picking a relatively new clinic for every "old," established

one, however you define "age" of clinic to be. And you could

continue the scenario by trying to match on still other key variables:

e.g., funding sources, type of clientele served, and so forth.

This, then, is the general idea behind maximum-variation sampling. You

are attempting to build in diversity on certain key factors or variables

in order to hopefully give you at least some generalizing power over

those factors, as well as to look at the impact they may have on your

phenomenon of interest.

- Homogeneous samples. This is in a way the exact opposite

of the preceding (#2, in the preceding discussion). You are pre-targeting

a subgroup as "alike" as possible in order to study it intensively.

You would thus be staying within rural new clinics of X funding level,

etc., etc. This strategy is particularly useful when you are doing focus

group interviewing - a data collection procedure we'll be talking more

about in future lesson packets.

- u. This strategy is particularly useful when your goal is

to characterize for outside readers the classic "typical"

subject, case, etc. For our Intro to Statistics friends, then, it

might involve determining the modal (most frequently occurring)

category and then hand-picking (perhaps with the help of "cultural

insiders" or "key informants") a subject who fits "that

typical" profile. For example, I suppose that records could be

used to determine the "typical" NAU undergraduate student

-- which would be the modal major? socioeconomic status? gender? ethnicity?

place of residence? etc., etc.? Such a student could be selected and

then interviewed, surveyed, etc. Keep in mind, though: "typical"

for purposes of informing outsiders "what is typical?" does

not necessarily imply "therefore, generalize what you find from

this 'typical' case, or cases, to all NAU undergraduate students."

So, once again, there is a key difference between "statistical"

vs. "analytic" generalization.

- Critical case sampling. While this one may also at times

be equivalent to the "extreme" or "deviant" case

sampling that we first encountered in # 1, above, this is not necessarily

the case. For critical case sampling, we pretarget a "key"

individual, subgroup, setting, etc., that is particularly relevant to

our study. For example, media experts may draft some sample TV and/or

print advertising regarding a candidate for political office. They may

target the "well-educated" citizens of a community as the

focus of their pilot study of the understandability of these test ads.

Members of this "well-educated" subgroup might be shown the

ads and then invited to participate in a focus group or individual interview

session designed to gauge these subjects' reactions to these ads. For

if the "well-educated" citizens of that community, however

operationally defined, cannot understand the message or basic premise

of these campaign TV and print ads, then it's a likely bet that they

will not go over too well with the general populace, either. Thus, one

can consider these critical cases as somewhat akin to the "purposive"

or "judgment" sampling procedures discussed in the Intro to

Research population and sampling packet addendum. As with typical case

sampling in # 4, pg. 9, our goal is not necessarily to generalize to

all subjects, but rather to obtain the in-depth information and understanding

regarding these key cases, subgroups, etc.

- Snowball or chain sampling. This is pretty much as discussed

in our related Intro to Research lesson packet. By using subjects

to help locate other target subjects, we may gain better access

to such specialized, information-rich, limited subpopulations than we

would relying on our own resources alone.

- Criterion sampling. This too may be considered synonymous

with "judgment" or "purposive" sampling, as per

our Intro to Research population and sampling discussion Module

#5, EDR 610 learning materials. It involves needing to pre-target

and include those subjects, cases, etc., which meet certain key selection

criteria. These could be, for instance, "Career Ladder teacher

incentive programs in rural Arizona communities." Such inclusion

criteria may be critical with regard to the phenomenon that we are trying

to study qualitatively. Thus, rather than take the chance that they

will come up in a random draw, we need to "explicitly go after"

them in our selection of qualitative sample subjects, subgroups, settings,

cases, etc.

- Confirmatory and disconfirmatory cases. This involves

the first "baby steps" of trying to generalize analytically.

It is akin to Robert Yin's 'cross-case analysis,' with one slight twist.

Suppose we have studied a school

system, as one of our former EDL doctoral candidates Dr. Dee Dee Nevelle

did for her dissertation research, to identify the factors that appear

to contribute to "positive school climate." The next step

for Dee Dee, and/or others who wish to pursue her line of research,

is to:

- Locate similar types of schools that also appear

to be "positive climate" schools and check to see

if those factors that Dee Dee identified also appear to be present

in these other "positive climate" schools -- e.g., "confirmatory

cases:" but also:

- Play "devil's advocate" with yourself! and really road-test

your findings from the other extreme. That is -- now

locate schools that have been identified as "poor"

or "negative" climate schools. Are those factors

that were identified by Dee Dee Nevelle absent in these negative

climate schools? or on the other hand, are they also present

here -- in which case we have some contradictory evidence to deal

with regarding their original, presumed linkage to "good climate"?

-- e.g., "disconfirmatory cases."

By replicating (confirming) and looking at what happens at the

other extreme (disconfirming), we are approaching the beginnings

of theory testing and validation of our initial qualitative findings.

This lends validity to these initial findings and results.

- Convenience sampling. This too is as discussed in our

Intro to Research population and sampling Module #5. It involves pre-targeting

particularly accessible sites, subjects, etc. So much of qualitative

research is highly dependent on gaining access to the site or field.

Thus you may be at the mercy of "gatekeepers" or "key

informants" that will allow such access.

As you've probably figured out, there is some overlap in the preceding

list. Also, as with the more traditional population and sampling labels

from our Intro to Research Module #5, it is very likely that a given

research study will utilize more than one of these procedures.

* * *

We'll continue our qualitative sojourn by proceeding into some strategies

for collecting qualitative data! 'Till next time, dear friends, remember

that access is everything .. !!!

Once you have finished you should:

Go on to Assignment 1

or

Go back to Qualitative Population and Sampling Procedures

E-mail M. Dereshiwsky

at statcatmd@aol.com

Call M. Dereshiwsky

at (520) 523-1892

Copyright © 1999

Northern Arizona University

ALL RIGHTS RESERVED

|